Far-right groups are leveraging artificial intelligence to spread deepfake videos of Adolf Hitler and other pro-Nazi imagery in an attempt to generate sympathy for the tyrant, according to a recent report by the UK-based non-profit Institute for Strategic Dialogue (ISD).

Founded in 2006, the institute is a political advocacy organization that tracks disinformation and hate speech online. In its most recent report, the ISD noted that AI voice generators were used to create English-language versions of Hitler’s speeches, with these videos accumulating over 50 million views across X, YouTube, TikTok, and Instagram in 2024.

“Social media platforms have long struggled with the spread of neo-Nazi ideologies, but the direct glorification of Hitler and the revisionism surrounding his role in the Holocaust present a new, unique threat,” the Director of Technology at the US branch of the institute, Isabelle Frances-Wright, told Decrypt. “AI has further exacerbated this threat, enabling bad actors to disseminate Hitler’s rhetoric to a broader and younger audience.”

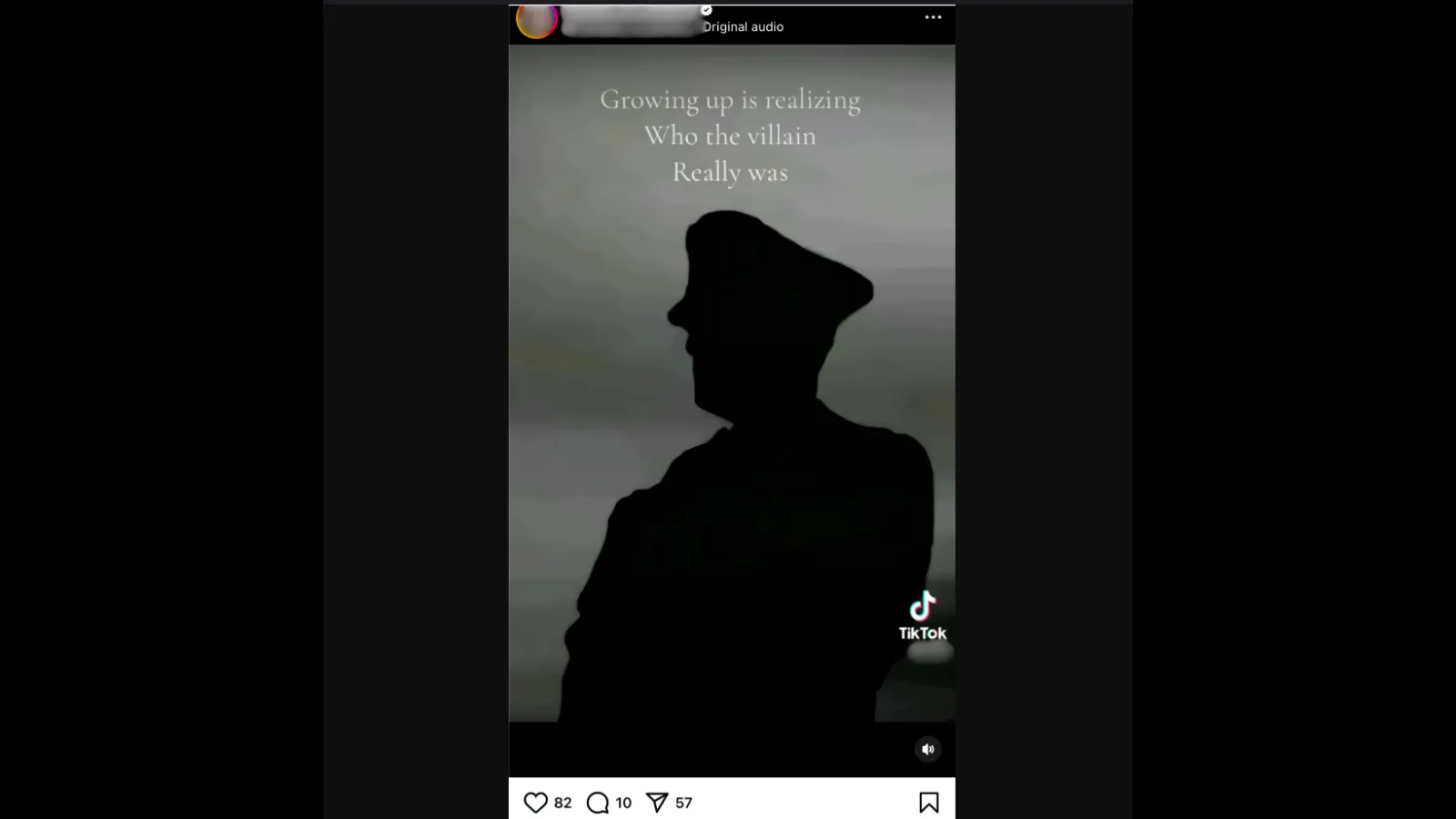

The ISD reported that many TikTok videos featured 20- to 30-second clips of Hitler’s speeches in English with background music, promoting antisemitic, anti-Muslim, anti-immigrant, and white supremacist messages.

“Hateful content, whether legal or not, tends to go viral on most social media platforms,” UCLA Professor of Information Studies Ramesh Srinivasan told Decrypt.

Since August, videos and posts of Hitler and Nazi iconography have garnered over 28.4 million views on X, TikTok, and Instagram, the report said.

“Analysts were able to easily detect content on X that was likely violative of its policies, with just 11 posts receiving more than 11.2 million views over a one-week period,” the report said. “This content was more readily available than on other platforms via basic searches.”

In a 2023 update to its policies, X said the platform prohibits “hateful content,” such as incitement, slurs, dehumanization, and hateful imagery, including in profile pictures, usernames, or profile bios.

“The classic challenge we’ve been trying to regulate is the role of algorithms,” Srinivasan explained. “These computational rules apply to all data and posts, and are trained to push content that is more likely to go viral. As a result, the most visible content is often the most harmful.”

Meta, TikTok, and Google did not immediately respond to Decrypt’s requests for comment.

Srinivasan said those ultimately creating the content were likely a blend of troublemakers and would-be political manipulators. “On one level, there is the hacker; on another, more sophisticated, psychometric and psychographic targeting,” he said, referencing the role Cambridge Analytica played during the 2016 U.S. presidential election.

“That’s a huge part of what powers the internet right now,” he said.

“It has real material effects, especially in places without a strong media,” he said. “Disinformation, hate speech, and fringe speech promoted by those with significant followings are intentionally crafted to go viral. This type of content captures attention, but it is also tearing us apart.”